The Core Privacy Challenge in RAG

A standard RAG pipeline involves several stages: ingesting your documents, chunking them, creating numerical representations (embeddings), storing them in a vector database, and then using them at query time to provide context to an LLM.

Each step presents a potential privacy risk:

- Data Ingestion & Storage: Where does your raw data live? Who has access to it? Is it encrypted both in transit and at rest?
- The Embedding Process: Are your sensitive documents being sent to a third-party API for embedding? This could expose raw text to an external vendor.
- The Vector Database: Embeddings are numerical representations rather than raw text, but they are not one-way. Embedding inversion attacks can sometimes reconstruct much of the original text from a vector. The similarity between vectors can also leak information, for example by revealing which documents concern the same customer or deal.
- The Generation Step: When a user asks a question, what data is sent to the LLM? Is it just the relevant snippets, or more? Is the LLM provider retaining this data?

Addressing these challenges requires more than just promises; it requires a transparent, verifiable, and robust architecture.

Our Blueprint: A Privacy-Preserving RAG Pipeline

Our architecture is built on two principles: "zero trust" and "privacy by design." We assume no implicit trust and ensure that every component and process is explicitly designed to protect your data.

Let's walk through our pipeline, component by component.

1. Data Ingestion & Chunking: Your Data, Your Environment

From the very first step, we ensure your data's sovereignty. Our solution integrates directly with your secure data sources (like S3, Google Drive, or SharePoint) using secure, short-lived authentication tokens.
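For illustration, here is one way a short-lived, scoped token can be issued and checked using only the Python standard library. The token format, the `issue_token` and `verify_token` helpers, and the 15-minute default lifetime are hypothetical. The connectors themselves use their own mechanisms (for example, temporary AWS STS credentials for S3, or OAuth 2.0 access tokens for Google Drive and SharePoint), and a production system would normally rely on a vetted format such as JWT rather than a hand-rolled one.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional

# Hypothetical signing key; a real deployment would load this from a secrets manager.
SIGNING_KEY = secrets.token_bytes(32)


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(subject: str, scope: str, ttl_seconds: int = 900,
                now: Optional[float] = None) -> str:
    """Issue an HMAC-signed token that expires ttl_seconds after `now`."""
    issued = time.time() if now is None else now
    payload = {"sub": subject, "scope": scope, "exp": int(issued + ttl_seconds)}
    body = _b64(json.dumps(payload, sort_keys=True).encode())
    sig = _b64(hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).digest())
    return f"{body}.{sig}"


def verify_token(token: str, scope: str, now: Optional[float] = None) -> Optional[dict]:
    """Return the payload only if signature, scope, and expiry all check out."""
    try:
        body, sig = token.split(".")
    except ValueError:
        return None  # malformed token
    expected = _b64(hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).digest())
    if not hmac.compare_digest(sig, expected):
        return None  # tampered, or signed with a different key
    payload = json.loads(_unb64(body))
    current = time.time() if now is None else now
    if payload["scope"] != scope or current >= payload["exp"]:
        return None  # wrong scope or expired
    return payload
```

The optional `now` argument exists only to make expiry easy to test; in normal use the helpers read the wall clock. A token issued with `ttl_seconds=60` at time 1000 verifies at time 1030 and is rejected from time 1060 onward.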

Crucially, the initial processing—the chunking of documents into manageable pieces—happens within a secure, isolated environment dedicated to you. Your raw documents are never stored persistently on our primary systems. They are processed in-memory and discarded immediately after chunking and embedding, adhering to the principle of data minimization.
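A minimal sketch of in-memory chunking, assuming fixed-size character windows with a small overlap (the 500/50 defaults and the `chunk_text` name are illustrative, not the pipeline's actual settings). The generator never writes to disk, so once each chunk has been embedded and the caller drops its references, no persisted copy of the raw text remains. Python cannot guarantee that freed memory is zeroed, so "discarded" here means not persisted, not securely erased.

```python
from typing import Iterator


def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> Iterator[str]:
    """Yield overlapping character windows over `text`, entirely in memory."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    if not text:
        return  # nothing to chunk
    step = chunk_size - overlap
    # Stop once the remaining text is fully covered by the previous window's overlap.
    for start in range(0, max(len(text) - overlap, 1), step):
        yield text[start:start + chunk_size]
```

Many production chunkers split on tokens, sentences, or headings rather than raw characters; the overlap keeps a sentence that straddles a boundary visible in both neighboring chunks.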

2. The Embedding Stage: In-House and In-Control

This is one of the most critical privacy junctures. Many RAG providers rely on external, black-box embedding models (e.g., from OpenAI or Cohere). This means sending your proprietary data across the internet to a third party, where you lose control over its use and retention.

We do it differently. We utilize state-of-the-art, open-source embedding models hosted within our own secure infrastructure. This means your data is never sent to an external AI provider for embedding. We manage the entire process, ensuring a secure and confidential transformation of your text chunks into vector embeddings.

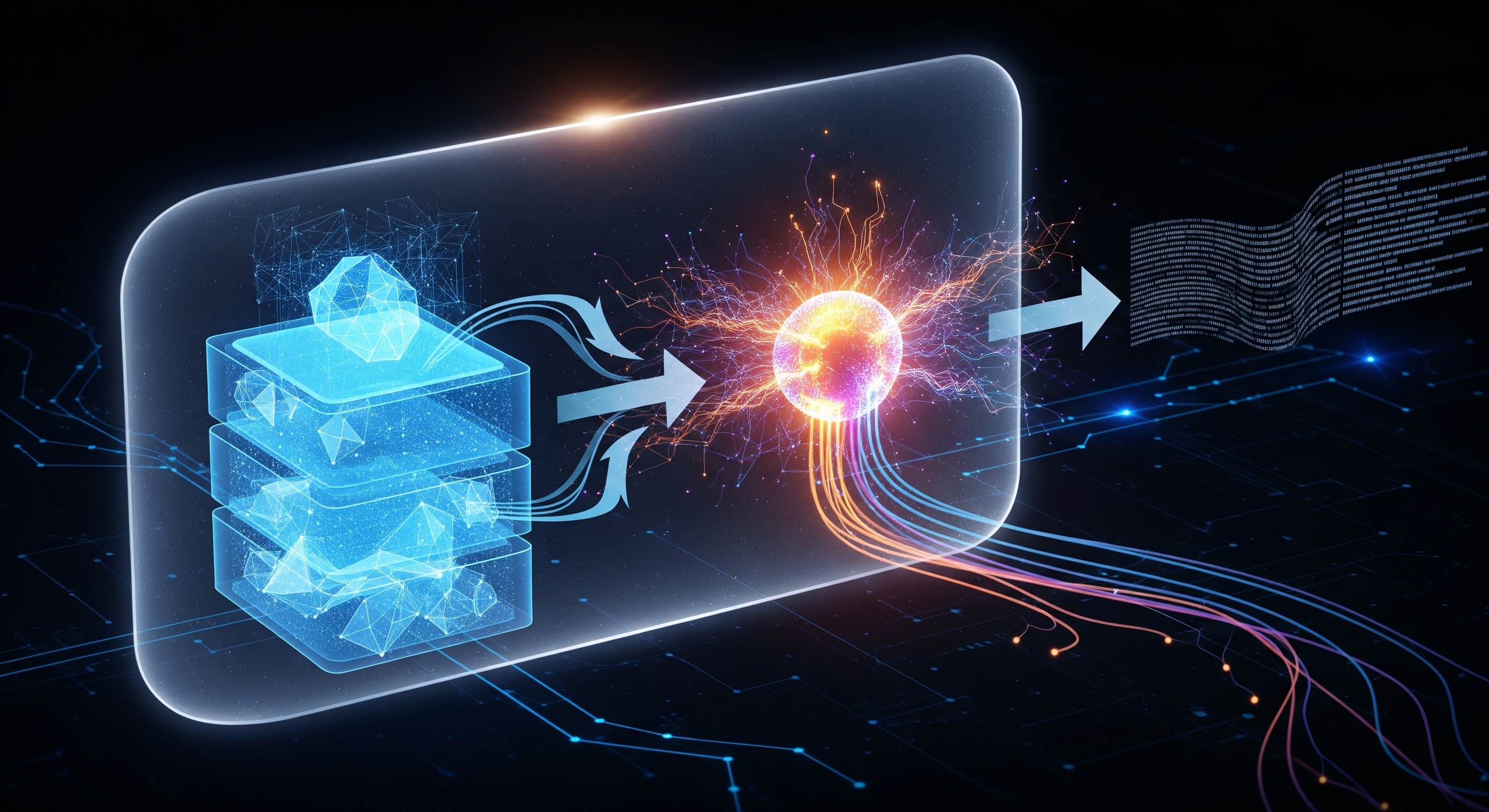

The process looks like this:

Document Chunk (Text) $\rightarrow$ [Your Startup Name]'s Secure Embedding Model $\rightarrow$ Vector Embedding (e.g., a 1024-dimensional vector $v \in \mathbb{R}^{1024}$)

This self-hosted approach not only keeps your text away from third-party embedding APIs but also allows us to fine-tune models specifically for the types of documents you work with, improving relevance without compromising security.
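To show the shape of this step, here is a dependency-free stand-in for a self-hosted model: a feature-hashing bag-of-words embedder that maps a chunk to a unit vector in $\mathbb{R}^{1024}$ entirely in-process, with no network call. It is not an open-source neural embedding model and its vectors capture far less meaning; `embed_locally` and the 1024-dimension default are assumptions for illustration. In a real deployment the function body would call a locally hosted model instead.

```python
import hashlib
import math
import re

DIMENSIONS = 1024  # mirrors the 1024-dimensional example; an assumption, not a requirement


def embed_locally(text: str, dims: int = DIMENSIONS) -> list[float]:
    """Map text to a unit-length vector via signed feature hashing (no external API)."""
    vector = [0.0] * dims
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        index = int.from_bytes(digest[:4], "big") % dims
        sign = 1.0 if (digest[4] & 1) == 0 else -1.0  # random signs offset collision bias
        vector[index] += sign
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """For unit-length vectors, the dot product equals the cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


query = embed_locally("quarterly revenue forecast")
corpus = {
    "finance": embed_locally("Revenue forecast for the next quarter by region"),
    "hr": embed_locally("Holiday schedule and leave policy"),
}
best = max(corpus, key=lambda name: cosine_similarity(query, corpus[name]))
```

Because the function is deterministic and runs locally, the same chunk always yields the same vector, and nothing about the text leaves the process.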

3. The Vector Store: Encrypted and Isolated

Once the vector embeddings are created, they are stored in our specialized vector database. We ensure robust security here through multiple layers:

- Tenant Isolation: Your data is stored in a logically and physically isolated database instance. There is no possibility of cross-contamination between customer data.
- Encryption at Rest: All data in the vector database, including the vectors and their associated metadata, is encrypted using the AES-256 standard.
- Encryption in Transit: Any communication between our application services and the vector database is secured using TLS 1.3.
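The sketch below illustrates two of these layers under stated assumptions. The first is a client-side TLS context, built with Python's standard `ssl` module, that refuses anything older than TLS 1.3. The second is a toy in-memory store (the hypothetical `TenantScopedStore`) whose every read and write is keyed by tenant, so a query for one customer never scans another's partition. It models only logical isolation in the query path; the physical isolation and AES-256 encryption at rest described above come from separate database instances and the storage layer, which this sketch does not cover.

```python
import ssl
from dataclasses import dataclass, field


def vector_db_tls_context() -> ssl.SSLContext:
    """Client TLS context for the vector database connection: TLS 1.3 only."""
    context = ssl.create_default_context()  # verifies certificates and hostnames
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


@dataclass
class TenantScopedStore:
    """Toy in-memory vector store partitioned by tenant ID."""
    _data: dict[str, list[tuple[str, list[float]]]] = field(default_factory=dict)

    def upsert(self, tenant_id: str, doc_id: str, vector: list[float]) -> None:
        self._data.setdefault(tenant_id, []).append((doc_id, vector))

    def search(self, tenant_id: str, query: list[float], k: int = 3) -> list[str]:
        # Only the caller's own partition is ever scanned; there is no cross-tenant path.
        rows = self._data.get(tenant_id, [])
        ranked = sorted(rows, key=lambda row: -sum(q * v for q, v in zip(query, row[1])))
        return [doc_id for doc_id, _ in ranked[:k]]
```

Requiring the tenant ID on every call, rather than filtering results afterwards, means a forgotten filter cannot silently widen a query across customers.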

4. The RAG Loop: Anonymized and Ephemeral

When a user submits a query, our system performs the "retrieval" step, finding the most relevant data chunks from your vector store. Here’s how we protect your privacy during the final, critical "generation" step:

- Contextual Snippets Only: We send only the retrieved, relevant text snippets to the LLM as context, never the user's entire document base.
- LLM Provider Agnosticism & Anonymization: We partner with leading LLM providers who have strict zero-data-retention and zero-training policies. Before sending the request, we can apply an additional layer of anonymization to the snippets, scrubbing them of Personally Identifiable Information (PII) where applicable.
- API-Level Agreements: We have robust contractual agreements with our LLM providers that legally prohibit them from storing your queries or using them to train their models. The data sent for generation is ephemeral and exists only for the duration of the inference call.
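To make the "snippets only" and anonymization points concrete, here is a minimal sketch, assuming the retrieved snippets arrive already ranked by relevance. `scrub` and `build_prompt` are hypothetical helpers, and the two regular expressions catch only obvious email addresses and phone numbers; real PII detection must also handle names, addresses, and national identifiers, and is usually done with a dedicated detection tool rather than hand-written patterns.

```python
import re

# Illustrative patterns only; they are far from complete PII coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def scrub(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def build_prompt(question: str, snippets: list[str], max_snippets: int = 3) -> str:
    """Include only the top-ranked snippets, scrubbed, never the whole corpus."""
    context = "\n\n".join(scrub(s) for s in snippets[:max_snippets])
    return f"Answer using only this context:\n{context}\n\nQuestion: {scrub(question)}"
```

The phone pattern is deliberately loose and will also redact some digit runs such as ISO dates (`2024-01-15`); for data leaving your boundary, over-redaction is usually the safer failure mode.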

Beyond the Pipeline: Holistic Security

A secure pipeline is only as strong as the environment it operates in. Our commitment to your trust extends to our entire platform:

- Strict Access Controls: We enforce Role-Based Access Control (RBAC) across our platform, ensuring that only authorized individuals within your organization can access specific data sets.
- Comprehensive Auditing: We provide detailed audit logs, so you have a transparent and immutable record of who accessed what, and when.
- Compliance Ready: Our architecture is designed to support compliance with major frameworks such as SOC 2 Type II and GDPR.
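A minimal sketch of the first two ideas, with hypothetical roles and dataset names: an RBAC check that consults a role-to-dataset grant table, and an audit log in which every entry commits to the SHA-256 hash of the previous one. Hash chaining makes after-the-fact edits detectable rather than impossible; genuine immutability would additionally need write-once storage or an external anchor for the latest hash.

```python
import hashlib
import json
import time

# Hypothetical role -> permitted datasets mapping.
ROLE_GRANTS = {
    "analyst": {"sales-docs"},
    "legal": {"sales-docs", "contracts"},
}

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log; each entry stores the hash of its predecessor."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = json.dumps({"prev": prev, **record}, sort_keys=True)
        digest = hashlib.sha256(body.encode()).hexdigest()
        self.entries.append({"prev": prev, **record, "hash": digest})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            record = {k: v for k, v in entry.items() if k != "hash"}
            if record["prev"] != prev:
                return False
            body = json.dumps(record, sort_keys=True)
            if hashlib.sha256(body.encode()).hexdigest() != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def check_access(log: AuditLog, user: str, role: str, dataset: str) -> bool:
    """RBAC decision; both grants and denials are written to the audit log."""
    allowed = dataset in ROLE_GRANTS.get(role, set())
    log.append({"user": user, "role": role, "dataset": dataset,
                "allowed": allowed, "ts": time.time()})
    return allowed
```

Logging denials as well as grants matters: repeated refused requests are often the first sign of a misconfigured role or a probing account.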

The Bottom Line: Your Competitive Edge is Safe With Us

In the age of AI, your data is your most valuable asset. It's your institutional knowledge, your unique market insights, and your competitive differentiator.

By choosing a RAG-as-a-Service (RAGaaS) provider, you are not just buying a piece of technology; you are entering into a partnership built on trust. Our privacy-first architecture is our commitment to you that your data will be protected, your privacy will be respected, and your competitive edge will remain securely yours.