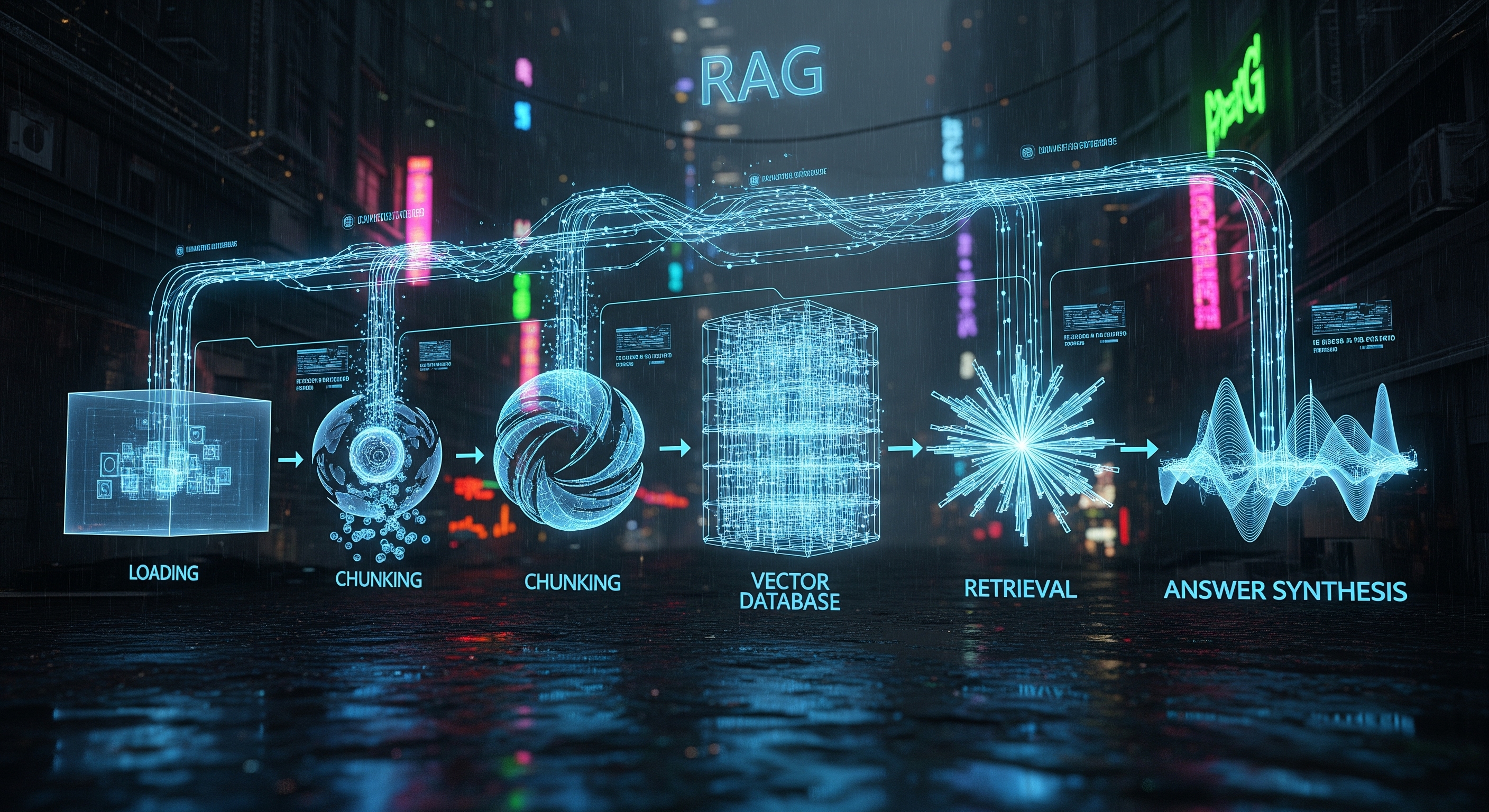

Anatomy of a RAG Pipeline: From Vector Databases to Answer Synthesis

Stage 1: Loading and Chunking

First things first, you need to feed your data into the system. This is the loading stage. The data can come from anywhere: PDFs, Word documents, websites, a Notion database, or even a Slack channel. The pipeline ingests this raw, unstructured data.

However, LLMs have a limited context window—they can only process a certain amount of information at once. You can't just drop a 500-page manual into the prompt. That's where chunking comes in. The loaded data is strategically split into smaller, manageable pieces or "chunks." This isn't just a random chop; effective chunking preserves the semantic meaning of the text, often by splitting it into paragraphs or sections.

-

Goal: Ingest raw data and break it into meaningful, bite-sized pieces for processing.

Stage 2: The Indexing Stage: Welcome, Vector Databases! 🗂️

Once your data is chunked, the system needs a way to understand and organize it. This is the indexing stage, and it's where the "vector" in "vector database" becomes crucial.

Each chunk of text is converted into a numerical representation called a vector embedding. Think of this as a sophisticated digital fingerprint. An embedding model (a specialized neural network) reads a chunk of text and captures its semantic meaning as a list of numbers (a vector). Chunks with similar meanings will have vectors that are "close" to each other in mathematical space.

These vectors are then stored in a specialized database designed for this exact purpose: a vector database. This database is highly optimized to store and search through millions or even billions of these vectors at incredible speeds.

-

Goal: Convert text chunks into numerical vectors (embeddings) and store them in a searchable vector database.

Stage 3: The Retrieval Stage: Finding the Right Context 🔍

Now, let's say you ask a question, like, "What was our Q2 revenue in Europe?" This is where the "Retrieval" in RAG happens.

Your query is also converted into a vector embedding using the very same model. The RAG system then uses this query vector to search the vector database. It performs a similarity search (often using an algorithm like Cosine Similarity) to find the text chunks whose vectors are closest to your query's vector.

The result is a ranked list of the most relevant chunks of text from your original documents. These aren't just keyword matches; they are chunks that are semantically related to your question. This is the crucial context the LLM needs to formulate a precise answer.

-

Goal: Take the user's query, convert it to a vector, and retrieve the most relevant information chunks from the vector database.

Stage 4: The Synthesis Stage: Generating the Final Answer ✨

With the most relevant context retrieved, we're at the final stage: answer synthesis.

The RAG pipeline takes your original query and the retrieved text chunks and bundles them into a new, augmented prompt for the LLM. The prompt essentially says: "Using the following information, please answer this question."

-

Original Query: "What was our Q2 revenue in Europe?"

-

Retrieved Context: [Chunk 1: "Q2 financial report shows European revenue at €5.4M...", Chunk 2: "Summary of European operations highlights a 15% YoY growth in Q2...", etc.]

-

LLM: The LLM processes this combined information and generates a coherent, human-like answer. For example: "Our Q2 revenue in Europe was €5.4 million, which represents a 15% year-over-year growth."

By providing this specific, grounded context, the LLM is far less likely to "hallucinate" or make up facts. Its answer is now based on your data, making it reliable and trustworthy.

-

Goal: Combine the user's query with the retrieved context and feed it to an LLM to generate a final, accurate answer.

Conclusion: The Power of a Well-Oiled Pipeline

The RAG pipeline is a powerful engine that transforms static documents into a dynamic, conversational knowledge source. By seamlessly blending the retrieval of specific information with the generative power of LLMs, it offers the best of both worlds: accuracy and intelligence.

Understanding this anatomy is key to building powerful AI applications. Here at [Your RAG SaaS Startup Name], we've perfected this pipeline, providing a robust and scalable platform so you can deploy powerful RAG solutions without the hassle. Ready to put your data to work? Get in touch!